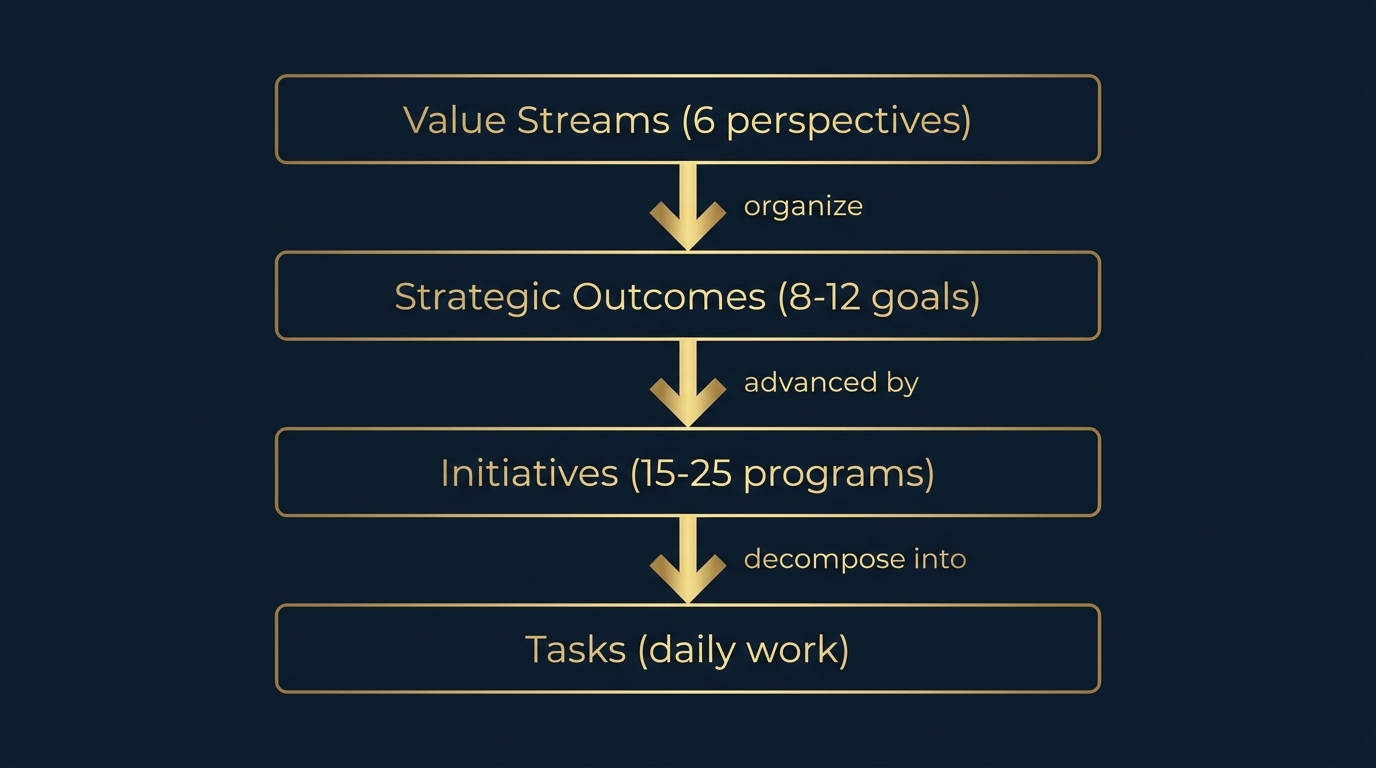

Strategic Outcomes Framework

A four-layer hierarchy connecting daily tasks to strategic purpose. Value Streams organize the portfolio, Strategic Outcomes define success, Initiatives bound the programs, and Tasks do the work—with explicit linkage at every level.

The Four-Layer Hierarchy

Every task in a well-governed studio can answer “why am I doing this?” in two sentences. Not through inspiration or storytelling—through structure.

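The Strategic Outcomes Framework provides this structure through four layers:

- Value Streams organize the portfolio

- Strategic Outcomes define success

- Initiatives bound the programs

- Tasks do the work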

Each layer has a distinct purpose, a distinct governance rhythm, and explicit linkage to the layers above and below.

Layer 1: Value Streams

Value Streams provide the portfolio perspective. Adapted from the Balanced Scorecard, they ensure the studio invests across multiple dimensions of success—not just revenue, not just brand, but a balanced portfolio.

The Six Value Streams

The outcomes and metrics below are illustrative examples—adapt thresholds to your context.

| Value Stream | Purpose | Example Outcomes |
|---|---|---|
| 💰 Financial Performance | Revenue, sustainability, margin | “Recurring revenue exceeds £50K annually” |
| 🖼️ Brand & Cultural Capital | Reputation, authority, positioning | “Recognized authority in luxury automotive photography” |
| 🤝 Client & Collector Delivery | Client outcomes, satisfaction, retention | “NPS above 8 for all completed commissions” |
| 🧪 Innovation & R&D | New capabilities, validated experiments | “Three validated frameworks published” |
| ⚙️ Operations & Capability | Efficiency, tooling, process quality | “Decision latency below 10 minutes” |
| 📚 Learning & Knowledge | Skills, documentation, institutional memory | “Core methodologies documented and transferable” |

Portfolio Balance Review

Monthly, review the distribution of active initiatives across value streams:

Healthy pattern: No single stream holds more than 40% of active initiatives. Financial and Brand together don’t exceed 60% (prevents over-commercialization). R&D and Learning together hold at least 20% (ensures future capability).

Warning signs:

- All initiatives in Financial Performance → optimizing for short-term revenue, starving brand and capability

- All initiatives in R&D → building without shipping, no market validation

- Zero in Learning → no documentation, knowledge loss when people leave
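The healthy-pattern thresholds above can be checked mechanically. The following is a minimal sketch, not part of the framework itself: the function name `portfolio_warnings` is hypothetical, and it assumes each active initiative is counted once under a single primary value stream, even though outcomes may belong to several.

```python
from collections import Counter

# Illustrative thresholds taken from the healthy pattern described above.
MAX_SINGLE_SHARE = 0.40
MAX_FINANCIAL_PLUS_BRAND = 0.60
MIN_RND_PLUS_LEARNING = 0.20


def portfolio_warnings(initiative_streams):
    """Return warnings for a list holding one value-stream name per active initiative."""
    total = len(initiative_streams)
    if total == 0:
        return ["No active initiatives to review"]

    counts = Counter(initiative_streams)
    share = {stream: n / total for stream, n in counts.items()}
    warnings = []

    # No single stream should hold more than 40% of active initiatives.
    for stream, fraction in share.items():
        if fraction > MAX_SINGLE_SHARE:
            warnings.append(f"{stream} holds {fraction:.0%} of initiatives (limit 40%)")

    # Financial and Brand together should not exceed 60%.
    commercial = share.get("Financial Performance", 0) + share.get("Brand & Cultural Capital", 0)
    if commercial > MAX_FINANCIAL_PLUS_BRAND:
        warnings.append(f"Financial + Brand hold {commercial:.0%} (limit 60%)")

    # R&D and Learning together should hold at least 20%.
    future = share.get("Innovation & R&D", 0) + share.get("Learning & Knowledge", 0)
    if future < MIN_RND_PLUS_LEARNING:
        warnings.append(f"R&D + Learning hold {future:.0%} (minimum 20%)")

    return warnings
```

An empty result means the distribution matches the healthy pattern. Any returned warning is a prompt for discussion in the monthly review, not an automatic verdict.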

Layer 2: Strategic Outcomes

Strategic Outcomes are the goals layer—specific future states to achieve in 12-24 months. They’re more concrete than vision, more durable than quarterly OKRs.

Outcome Properties

| Property | Purpose | Example |
|---|---|---|
| Name | Clear, outcome-focused statement | “Generate £50K recurring annual revenue” |
| Outcome Type | Categorizes for indicator selection | Revenue, Brand Recognition, Capability Build |
| Value Stream | Portfolio perspective (can be multiple) | Financial Performance + Client Delivery |
| Status | Lifecycle stage | Planning, Active, At Risk, Achieved, Deferred, Cancelled |
| Target Date | When success is expected | Q4 2026 |
| Target Metric | How success is measured | “ARR at least £50,000” |
| Progress Notes | Narrative updates | “Q3: 3 commissions closed, pipeline building” |

Outcome Types

Different outcome types require different indicator patterns:

| Type | Leading Indicators | Lagging Indicators |
|---|---|---|
| Revenue | Qualified inquiries, pipeline value, proposal win rate | Actual revenue, ARR growth |
| Client Acquisition | Outreach volume, response rate, meeting conversions | New clients acquired |
| Brand Recognition | Content engagement, share of voice, press mentions | Inbound inquiry source attribution |
| Capability Build | Training hours, documentation coverage, tool adoption | Process efficiency, error rates |
| Knowledge Validation | Framework downloads, citation count, feedback quality | Consulting inquiries, speaking invitations |
| Operational Efficiency | Decision latency, cycle time, WIP levels | Throughput, burnout signals |

How Many Outcomes?

Recommended: 8-12 active outcomes across all value streams.

Fewer than 8 suggests under-specification—outcomes too broad to be actionable. More than 12 suggests over-specification—unable to focus.

Each outcome should be achievable in 12-24 months. Longer timeframes need intermediate milestones. Shorter timeframes are probably initiatives, not outcomes.
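The two sizing rules above (8-12 active outcomes, each with a 12-24 month horizon) can be expressed as a small check. This is a rough sketch under stated assumptions: the function names are hypothetical, and the horizon is measured in whole calendar months from the date the outcome was set, ignoring the day of the month.

```python
from datetime import date


def months_between(start, end):
    """Whole calendar months from start to end (day of month is ignored)."""
    return (end.year - start.year) * 12 + (end.month - start.month)


def classify_horizon(set_on, target_date):
    """Classify a candidate outcome by the length of its time horizon."""
    months = months_between(set_on, target_date)
    if months < 12:
        return "probably an initiative"
    if months > 24:
        return "needs intermediate milestones"
    return "outcome"


def outcome_count_signal(active_count):
    """Compare the number of active outcomes with the recommended 8-12 range."""
    if active_count < 8:
        return "under-specified"
    if active_count > 12:
        return "over-specified"
    return "ok"


# Example: an outcome set in January 2025 with a Q4 2026 target date.
print(classify_horizon(date(2025, 1, 15), date(2026, 12, 31)))
print(outcome_count_signal(10))
```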

Layer 3: Initiatives

Initiatives are the programs layer—bounded efforts that advance strategic outcomes. They’re more concrete than outcomes, more strategic than tasks.

Initiative Types

Four types cover most creative studio work:

| Type | Description | Example | Typical Duration |
|---|---|---|---|
| Campaign | Marketing/content programs with defined scope | “Multi-Clock Framework Authority” | 6-12 weeks |
| Client Work | Client engagements and commissions | “Pilot Commission” (internal project name) | 2-8 weeks |
| R&D | Research and experimental development | “AI Governance Protocol Development” | 4-16 weeks |
| Internal Build | Process/capability improvements | “MIR System Implementation” | 2-6 weeks |

Initiative Properties

| Property | Purpose | Example |
|---|---|---|
| Name | Descriptive identifier | “Multi-Clock Framework Authority Campaign” |
| Type | Governs appropriate patterns | Campaign |
| Status | Lifecycle stage | Planning, Active, On Hold, Complete, Archived |
| Strategic Outcomes | Which outcomes this advances (relation) | “Establish thought leadership in creative governance” |
| Timeline | Start and end dates | Nov 3 – Dec 14, 2025 |
| Success Criteria | How we know it worked | “5 qualified consulting inquiries” |
| Approach | High-level method | “Weekly content across 3 platforms, anchor-and-adapt pattern” |
| Owner | Accountable person | Andy |

Outcome-Initiative Linkage

In practice, we don’t create initiatives unless they link to at least one strategic outcome. This is the critical connection that ensures work serves purpose.

Questions to ask before creating an initiative:

- Which outcome(s) does this advance?

- How will we know if it worked?

- What’s the kill signal if it’s not working?

- Do we have capacity for this given existing initiatives?

If you can’t answer these questions, consider whether the initiative is ready. The discipline of linkage helps prevent initiative sprawl.
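The four questions above work as a creation gate. One way to sketch that gate, assuming a hypothetical `InitiativeProposal` record whose field names are invented for illustration:

```python
from dataclasses import dataclass, field


@dataclass
class InitiativeProposal:
    """Hypothetical record of a proposed initiative (field names are assumptions)."""
    name: str
    outcomes: list = field(default_factory=list)  # names of linked strategic outcomes
    success_criteria: str = ""
    kill_signal: str = ""
    capacity_confirmed: bool = False


def readiness_gaps(proposal):
    """Return the gate questions the proposal cannot yet answer."""
    gaps = []
    if not proposal.outcomes:
        gaps.append("Which outcome(s) does this advance?")
    if not proposal.success_criteria.strip():
        gaps.append("How will we know if it worked?")
    if not proposal.kill_signal.strip():
        gaps.append("What's the kill signal if it's not working?")
    if not proposal.capacity_confirmed:
        gaps.append("Do we have capacity for this given existing initiatives?")
    return gaps
```

A proposal with no gaps is ready to create. Any remaining question signals that the initiative needs more thought first.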

Initiative Portfolio View

Visualize initiatives by outcome:

Strategic Outcome: "Generate £50K recurring annual revenue"

├── Campaign: "After-Image Prospect Marketing" (Active)

├── Client Work: "Pilot Commission" (Complete)

├── Client Work: "First Paid Commission" (Active)

└── Internal Build: "Commission Pipeline Automation" (Planning)

Strategic Outcome: "Establish thought leadership in creative governance"

├── Campaign: "Multi-Clock Framework Authority" (Active)

├── R&D: "Governance Protocols Paper" (Active)

└── Internal Build: "Codex Publication System" (Complete)

This view reveals:

- Which outcomes have supporting initiatives

- Which outcomes are under-resourced (no active initiatives)

- Which outcomes are over-invested (too many competing initiatives)
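The portfolio view above amounts to grouping initiatives by outcome and counting the active ones. A minimal sketch follows, with two simplifying assumptions: each initiative links to one outcome (the framework allows several), and "too many competing initiatives" means more than three active ones, a threshold chosen only for illustration.

```python
from collections import defaultdict

# Assumed threshold; the text does not say how many initiatives is "too many".
MAX_ACTIVE_PER_OUTCOME = 3


def portfolio_view(outcomes, initiatives):
    """Group initiatives by outcome and flag resourcing problems.

    outcomes: list of outcome names.
    initiatives: list of (name, status, outcome_name) tuples.
    """
    by_outcome = defaultdict(list)
    for name, status, outcome in initiatives:
        by_outcome[outcome].append((name, status))

    report = {}
    for outcome in outcomes:
        linked = by_outcome.get(outcome, [])
        active = [name for name, status in linked if status == "Active"]
        if not active:
            flag = "under-resourced"
        elif len(active) > MAX_ACTIVE_PER_OUTCOME:
            flag = "over-invested"
        else:
            flag = "ok"
        report[outcome] = {"initiatives": linked, "active": len(active), "flag": flag}
    return report
```

Outcomes that appear in `outcomes` but have no linked initiatives still show up in the report, which is what makes under-resourced outcomes visible.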

Layer 4: Tasks

Tasks are the execution layer—the daily work tracked in MIR (Multi-Clock Idea Register). The integration with the Strategic Outcomes Framework adds one critical property:

Initiative relation: Every task links to an initiative.

This completes the golden thread:

Task: "Edit photos from pilot commission"

↑ belongs to

Initiative: "Pilot Commission" (Type: Client Work)

↑ advances

Strategic Outcome: "Validate Anachrome Protocol with paying client"

↑ contributes to

Value Stream: 🤝 Client & Collector Delivery

Orphan Tasks

Tasks without initiative linkage are orphans. They fail governance because they cannot be traced to any strategic outcome.

What to do with orphans:

- Rehome: Does this belong to an existing initiative? Link it.

- Promote: Does this represent a new initiative? Create the initiative first, then link.

- Kill: Does this serve no outcome? Delete it.

Default rule: Tasks should link to an initiative. The discipline of linkage helps prevent task sprawl and keeps work connected to purpose.
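The golden thread and the orphan rule can both be modelled with three small linked records. The class and function names below are hypothetical, and the sketch assumes one initiative per task and one outcome per initiative for simplicity.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Outcome:
    name: str
    value_streams: list  # one or more value-stream names


@dataclass
class Initiative:
    name: str
    outcome: Optional[Outcome] = None


@dataclass
class Task:
    name: str
    initiative: Optional[Initiative] = None


def trace_up(task):
    """Return [task, initiative, outcome, value streams], or None if the thread is broken."""
    if task.initiative is None or task.initiative.outcome is None:
        return None
    outcome = task.initiative.outcome
    return [task.name, task.initiative.name, outcome.name, ", ".join(outcome.value_streams)]


def orphans(tasks):
    """Tasks with no initiative link: candidates to rehome, promote, or kill."""
    return [task for task in tasks if task.initiative is None]


outcome = Outcome("Validate Anachrome Protocol with paying client", ["Client & Collector Delivery"])
pilot = Initiative("Pilot Commission", outcome)
edit = Task("Edit photos from pilot commission", pilot)
print(" -> ".join(trace_up(edit)))
```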

The Golden Thread in Practice

Tracing Up: Why Am I Doing This?

From any task, trace the golden thread:

Task: “Write Week 3 LinkedIn post for MCF campaign”

Why?

→ It’s part of Initiative: “Multi-Clock Framework Authority Campaign”

Why does that initiative exist?

→ It advances Outcome: “Establish thought leadership in creative governance”

Why does that outcome matter?

→ It contributes to Value Streams: Innovation & R&D + Brand & Cultural Capital

Why do those value streams matter?

→ They’re part of a balanced portfolio that ensures long-term studio health

In two sentences: “This post builds authority in creative governance methodology, which drives consulting inquiries and brand recognition—both strategic priorities for this quarter.”

Tracing Down: How Do We Achieve This?

From any outcome, trace the implementation path:

Outcome: “Generate £50K recurring annual revenue”

How will we achieve this?

→ Through these Initiatives:

- “After-Image Prospect Marketing” (driving inquiries)

- “Commission Pipeline” (converting inquiries)

- “Retention Program” (keeping clients)

What specific work advances each initiative?

→ Check the Tasks linked to each initiative in MIR

What’s the current status?

→ 15 active tasks across 3 initiatives, 2 blocked, 1 at risk

The hierarchy enables both strategic oversight (top-down) and tactical execution (bottom-up).

Indicator Framework

Three Levels of Measurement

Outcome Indicators (Monthly review)

- Target metrics for the outcome itself

- Primarily lagging indicators

- Example: “ARR growth rate”

Initiative Indicators (Weekly review)

- Progress metrics for the program

- Mix of leading and lagging

- Example: “Qualified inquiries this week”

Task Indicators (Daily)

- Operational metrics for execution

- Primarily leading indicators

- Example: “Tasks completed today”

Indicator Selection by Outcome Type

| Outcome Type | Leading Indicators | Lagging Indicators |
|---|---|---|
| Revenue | Pipeline value, inquiry velocity, proposal count | Closed revenue, ARR |
| Brand Recognition | Content reach, engagement rate, press mentions | Inbound attribution, NPS |
| Capability Build | Training completed, documentation coverage | Process efficiency, error rate |
| Knowledge Validation | Downloads, citations, feedback quality | Inquiry source attribution |

The Indicator Cascade

Thresholds shown are examples—set them based on your goals and baseline.

Good governance creates an indicator cascade:

Outcome: "Generate £50K recurring annual revenue"

├── Target Metric: ARR >= £50,000 (lagging)

├── Leading Indicator: Qualified inquiries per month >= 5

│

├── Initiative: "Prospect Marketing Campaign"

│   ├── Success Metric: 20 qualified inquiries (lagging for initiative)

│   ├── Leading Indicator: Content engagement rate >= 3%

│   │

│   └── Task Indicators (via MIR):

│       ├── Content pieces published per week

│       ├── Outreach messages sent

│       └── Response rate

When task indicators slip, investigate. When initiative indicators slip, escalate. When outcome indicators slip, reassess strategy.
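The escalation rule above (investigate, escalate, reassess) maps each indicator level to a response. Here is a minimal sketch, assuming every indicator is a "higher is better" number compared against a target. The function name and dictionary keys are hypothetical.

```python
# Response for a slipping indicator at each level, as described in the text.
RESPONSES = {
    "task": "investigate",
    "initiative": "escalate",
    "outcome": "reassess strategy",
}


def slipping_actions(indicators):
    """Return (level, name, response) for every indicator below its target.

    indicators: list of dicts with keys "level", "name", "actual", "target".
    Assumes "at least" semantics: an indicator slips when actual < target.
    """
    actions = []
    for indicator in indicators:
        if indicator["actual"] < indicator["target"]:
            level = indicator["level"]
            actions.append((level, indicator["name"], RESPONSES[level]))
    return actions
```

Indicators where lower is better, such as decision latency, would need the comparison reversed. That case is left out here to keep the sketch short.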

Governance Rhythms

Daily: Task Focus

- Work from Today View (HF tasks + imminent deadlines)

- Every task shows its initiative linkage

- Orphan tasks get immediate rehoming or killing

- No strategic decisions—pure execution

Weekly: Initiative Review

- Review active initiatives by status

- Check initiative indicators against targets

- Identify blocked/at-risk initiatives

- Decide: accelerate, continue, pause, or kill

- Update Progress Notes on affected outcomes

Monthly: Outcome Review

- Review outcome indicators across all value streams

- Portfolio balance check (distribution across streams)

- Identify outcomes at risk of missing target dates

- Strategic decisions: add initiatives, reassign resources, adjust targets

- Update outcome status: Active → At Risk, or At Risk → Active

Quarterly: Strategic Review

- Which outcomes were achieved, deferred, or cancelled?

- Which value streams are over/under-invested?

- What should next quarter’s outcome priorities be?

- Archive completed initiatives with learnings

- Set new initiatives for next quarter

Implementation: The Minimum Viable Hierarchy

You don’t need complex software to implement the Strategic Outcomes Framework. Here’s the minimum structure:

Database 1: Strategic Outcomes

Properties:

- Name (title)

- Outcome Type (select: Revenue, Brand Recognition, etc.)

- Value Stream (multi-select: 6 options)

- Status (status: Planning, Active, At Risk, Achieved, Deferred, Cancelled)

- Target Date (date)

- Target Metric (text)

- Progress Notes (rich text)

- Initiatives (relation → Initiatives database)

Database 2: Initiatives

Properties:

- Name (title)

- Type (select: Campaign, Client Work, R&D, Internal Build)

- Status (status: Planning, Active, On Hold, Complete, Archived)

- Strategic Outcomes (relation → Outcomes database)

- Timeline Start (date)

- Timeline End (date)

- Success Criteria (text)

- Approach (text)

- Owner (person)

- Tasks (relation → MIR database)

Database 3: MIR (Tasks)

Existing properties, plus:

- Initiative (relation → Initiatives database)

Key Views

Outcome Dashboard: All outcomes, grouped by Value Stream, showing status and initiative count.

Initiative Portfolio: All active initiatives, grouped by Outcome, showing status and task count.

Orphan Tasks: MIR tasks where Initiative is empty. Goal: zero orphans.

At Risk Review: Outcomes and Initiatives with At Risk status. Weekly focus list.

Integration with Multi-Clock Work

The Strategic Outcomes Framework extends Multi-Clock Work rather than replacing it.

Multi-Clock governs temporal rhythm: Which tasks to work on today (HF), this week (LF), or review monthly (Dormant).

Strategic Outcomes governs purpose: Why those tasks matter and what they’re building toward.

Together:

| Clock | Outcome Integration |
|---|---|
| HF Tasks | Must link to active initiatives advancing active outcomes |
| LF Tasks | May link to planning-stage initiatives or support outcomes |
| Dormant | Ideas awaiting outcome linkage; reviewed for promotion when outcomes shift |
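The HF rule in the table above is the only strict one, so it is the easiest to check automatically. The sketch below is illustrative only. The dictionary keys are hypothetical, and it assumes an outcome marked At Risk still counts as active for this purpose.

```python
# Assumption: an "At Risk" outcome is still being actively pursued.
ACTIVE_OUTCOME_STATUSES = {"Active", "At Risk"}


def hf_violations(tasks):
    """Return names of HF tasks not linked to an active initiative and active outcome.

    tasks: list of dicts with keys "name", "clock", "initiative_status",
    and "outcome_status" (status values are None when the task is unlinked).
    """
    problems = []
    for task in tasks:
        if task["clock"] != "HF":
            continue  # LF and Dormant items have looser linkage rules
        initiative_ok = task.get("initiative_status") == "Active"
        outcome_ok = task.get("outcome_status") in ACTIVE_OUTCOME_STATUSES
        if not (initiative_ok and outcome_ok):
            problems.append(task["name"])
    return problems
```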

The Bandit Score Enhancement

The Bandit Score prioritizes within outcome-linked work:

- Revenue Potential → Higher for tasks in initiatives advancing Financial Performance outcomes

- Portfolio Value → Higher for tasks in initiatives advancing Capability or Brand outcomes

- Engagement Signal → Higher for tasks showing external validation toward outcome

- Exploration Bonus → Higher for tasks in R&D initiatives testing new approaches

The hierarchy doesn’t change how Bandit Score works—it enriches the signals that feed it.

Common Failure Modes

Failure Mode 1: Too Many Outcomes

Symptom: 20+ active outcomes, each with 1-2 initiatives.

Cause: Confusing outcomes with initiatives.

Fix: Consolidate. If it can be done in less than 3 months, it’s an initiative, not an outcome.

Failure Mode 2: Orphan Initiatives

Symptom: Initiatives without outcome linkage.

Cause: Creating initiatives before defining outcomes.

Fix: Require outcome linkage before initiative creation. Retroactively link or kill orphans.

Failure Mode 3: Zombie Outcomes

Symptom: Outcomes with no active initiatives for 3+ months.

Cause: Strategic ambition without resource commitment.

Fix: Either resource with initiatives or defer the outcome. Unresourced outcomes create guilt without progress.

Failure Mode 4: Indicator Blindness

Symptom: Outcomes with no defined indicators, or indicators never reviewed.

Cause: Treating outcomes as aspirations rather than measurable goals.

Fix: Every outcome needs at least one leading and one lagging indicator. Review monthly.

Failure Mode 5: Linkage Theater

Symptom: Everything links to everything; linkage is administrative, not meaningful.

Cause: Treating linkage as checkbox compliance rather than governance.

Fix: If a task truly advances multiple initiatives, pick the primary. If an initiative truly advances multiple outcomes, that’s fine, but 5+ linkages suggest the initiative is too broad.
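Two of the failure modes above have thresholds concrete enough to detect automatically: zombie outcomes (Failure Mode 3) and over-linked initiatives (Failure Mode 5). The following sketch uses hypothetical function names, approximates "3+ months" as 90 days, and assumes you record the date each outcome last had an active initiative.

```python
from datetime import date, timedelta

ZOMBIE_AFTER = timedelta(days=90)  # "3+ months", approximated as 90 days
BROAD_LINK_THRESHOLD = 5           # 5+ outcome links suggest an initiative is too broad


def zombie_outcomes(last_active, today):
    """Return outcomes with no active initiative for 90 days or more.

    last_active maps outcome name -> date it last had an active initiative,
    or None if it never had one.
    """
    return sorted(
        name for name, seen in last_active.items()
        if seen is None or today - seen >= ZOMBIE_AFTER
    )


def too_broad(initiative_links):
    """Return initiatives linked to 5 or more outcomes.

    initiative_links maps initiative name -> list of linked outcome names.
    """
    return sorted(
        name for name, links in initiative_links.items()
        if len(links) >= BROAD_LINK_THRESHOLD
    )
```

Both functions only surface candidates. Whether to resource, defer, or split them remains a judgment for the monthly and quarterly reviews.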

The Purpose Test

After implementation, every team member should pass the Purpose Test:

Point to any active task. In two sentences, explain:

- Which initiative does this advance?

- Which strategic outcome does that initiative serve?

If anyone can’t answer, the golden thread is broken. Find the break and fix it.

When everyone can answer, you have a strong signal you’re closing the Purpose Gap: tasks trace to purpose, and effort is more consistently directed at what matters.

Summary

The Strategic Outcomes Framework provides four layers of governance:

- Value Streams ensure balanced portfolio investment

- Strategic Outcomes define what success looks like

- Initiatives bound the programs that advance outcomes

- Tasks do the work, with explicit initiative linkage

The golden thread connects them: any task can trace back to strategic purpose in two sentences.

Combined with Multi-Clock Work (temporal governance) and Bandit Scoring (priority governance), the Strategic Outcomes Framework completes the Multi-Clock operating system for creative studios.

Your tasks already get done. This framework can help them add up to something coherent.